xFunnel Team

Perplexity AI has been making waves in the tech world for its "answer engine" approach to search. Since its launch in 2022, this San Francisco–based startup has grown rapidly, blending advanced language models, real-time data retrieval, and a user-first design philosophy to redefine what search means. In today's post, we're going to peel back the layers on how Perplexity runs things, dig into the nuts and bolts of its base model and Retrieval-Augmented Generation (RAG) system, and explore how it decides which companies pop up when you're searching for business solutions.

"It's not just about showing a list of links – it's about delivering a concise, context-rich answer that saves you time and gets to the heart of your query."

A Brief History and Recent Developments

Perplexity AI burst onto the scene as a new way to get answers without endless clicking. Backed by heavy hitters like Jeff Bezos and Nvidia, the company initially built its reputation on offering an ad-light, conversational search experience that integrates web data in real time. Over the past year, the platform has seen major enhancements:

Recently, Perplexity started running a self-hosted version of DeepSeek's R1 reasoning model for tasks like summarization and chain-of-thought generation. This helps produce more nuanced and accurate answers while keeping censorship concerns in check by processing data only in the US and Europe (source).

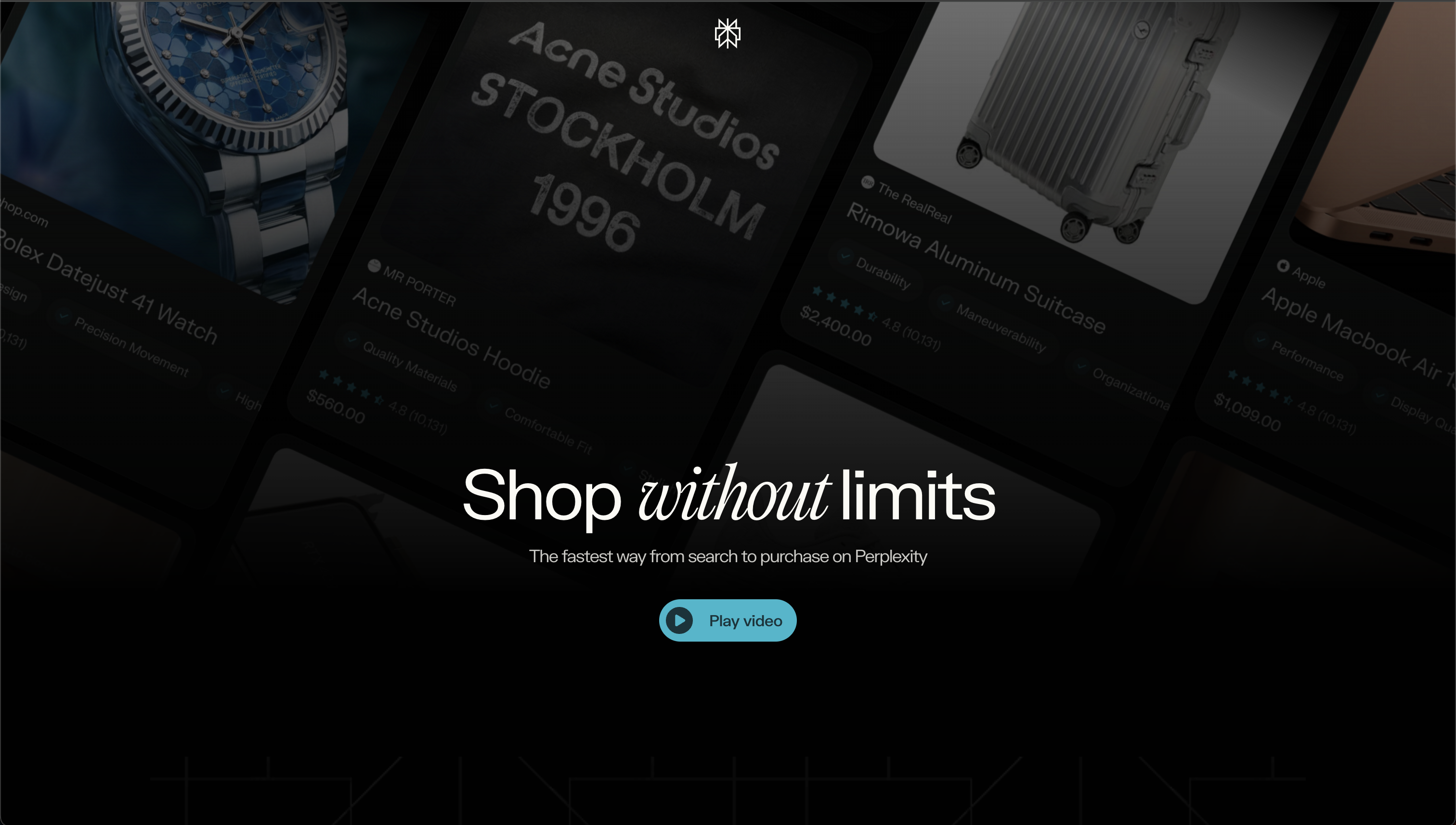

New features like a shopping hub (with "Buy with Pro"), customizable pages, and even collaborations with media giants have set the stage for what many are calling the "death of traditional search" in favor of streamlined, AI-generated answers.

Sources: MARKTECHPOST.COM, REUTERS.COM

How Does Perplexity Run Its Engine?

At its core, Perplexity isn't just a fancy front end for search—it's an intricate system that brings together several modern technologies:

1. Intelligent Crawling and Indexing

Perplexity uses a blend of its own web crawlers and integrations with external search APIs. These crawlers scan the web to collect the most up-to-date and authoritative content. Unlike old-school search engines that simply index pages by keywords, Perplexity's system gathers context-rich snippets that later feed into its language models.

2. Retrieval-Augmented Generation (RAG)

The magic behind Perplexity's "answer engine" is its use of RAG—a method that lets the underlying large language model (LLM) pull in external, real-time information before generating an answer. Here's a simplified breakdown of the process:

RAG Process Flow

- Indexing: Text from webpages (and even internal files for Pro users) is converted into numerical vectors using embedding techniques. These vectors get stored in a specialized database.

- Retrieval: When you ask a question, the system first retrieves the most relevant documents based on the similarity of their vector representations to your query.

- Augmentation: The retrieved documents are then "fed" into the LLM as additional context. This helps the model confirm facts and reduce hallucinations.

- Generation: Finally, the LLM generates an answer that weaves together both its pre-trained knowledge and the freshly retrieved data. This is what makes the responses so current and factually grounded.

Source: EN.WIKIPEDIA.ORG
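
To make the four steps above concrete, here is a minimal sketch of a generic RAG loop. It is not Perplexity's implementation: the bag-of-words `embed` function stands in for a learned embedding model, the in-memory `INDEX` list stands in for a vector database, and `generate` is a placeholder where a real LLM call would go. All names and sample documents are made up for illustration.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real systems use learned dense vectors."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# 1. Indexing: embed every document once and store the vectors.
DOCS = [
    "Perplexity is an answer engine that cites its sources",
    "Bananas are rich in potassium",
    "Retrieval-augmented generation feeds retrieved documents to a language model",
]
INDEX = [(doc, embed(doc)) for doc in DOCS]

def retrieve(query: str, k: int = 2) -> list:
    """2. Retrieval: return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def augment(query: str, docs: list) -> str:
    """3. Augmentation: put the retrieved text into the prompt as numbered sources."""
    context = "\n".join(f"[{i}] {d}" for i, d in enumerate(docs, 1))
    return f"Answer using only the sources below and cite them.\n{context}\n\nQuestion: {query}"

def generate(prompt: str) -> str:
    """4. Generation: placeholder only; a real system would call an LLM here."""
    return f"(model output for a prompt of {len(prompt)} characters)"

if __name__ == "__main__":
    question = "what is retrieval-augmented generation"
    prompt = augment(question, retrieve(question))
    print(prompt)
    print(generate(prompt))
```

The key design point is that the model never sees the whole index, only the handful of passages that score highest for the current question.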

3. Continuous Model Improvement

The system isn't static. Perplexity continually refines its methods by tweaking how it ranks retrieved documents, re-ranking results with additional signals (like user behavior), and even integrating new models as they become available.

The Base Model and RAG: What's Under the Hood?

Understanding the "brain" of Perplexity means looking at both its base models and its approach to RAG.

Base Model(s)

For its free offering, Perplexity currently relies on a standalone LLM that is built on GPT-3.5 with browsing capabilities. However, the Pro version is a whole other ballgame:

Multiple LLMs in Play:

- GPT-4

- Claude 3.5 Sonnet

- Grok-2

- Llama 3

- Custom in-house LLMs

This multiplicity allows the platform to optimize for different tasks—be it coding help, creative writing, or detailed product research.
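
How Perplexity actually routes queries between models isn't public, so the following is only a hypothetical sketch of the general idea: classify the task, then pick a model for it. The keyword lists and the placeholder model names (`model-a`, `model-b`, and so on) are assumptions for illustration, not a mapping of the models listed above.

```python
import re

# Hypothetical keyword hints per task type (illustrative only).
TASK_KEYWORDS = {
    "coding": {"code", "bug", "function", "python", "error"},
    "creative": {"story", "poem", "lyrics", "slogan"},
    "research": {"compare", "best", "review", "pricing"},
}

# Placeholder model names; which real model serves which task is an assumption.
MODEL_FOR_TASK = {
    "coding": "model-a",
    "creative": "model-b",
    "research": "model-c",
    "general": "model-default",
}

def classify_task(query: str) -> str:
    """Return the first task whose keywords appear in the query, else 'general'."""
    words = set(re.findall(r"[a-z0-9]+", query.lower()))
    for task, keywords in TASK_KEYWORDS.items():
        if words & keywords:
            return task
    return "general"

def pick_model(query: str) -> str:
    """Map a query to a model name via its task type."""
    return MODEL_FOR_TASK[classify_task(query)]

if __name__ == "__main__":
    for q in ["Fix this Python bug", "Tell me a story", "Hello there"]:
        print(q, "->", pick_model(q))
```

A production router would more likely use a trained classifier than keyword matching, but the shape of the decision is the same.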

DeepSeek R1 Integration

"We only use R1 for the summarization, the chain of thoughts, and the rendering."

Retrieval-Augmented Generation (RAG) Specifics

RAG in Perplexity is more than a buzzword—it's a carefully engineered pipeline:

- Data Preparation: External documents are embedded and stored. This ensures that when a query comes in, the model isn't relying solely on its static training data.

- Dynamic Augmentation: Every query triggers a fresh retrieval of relevant documents. These documents can come from the web, internal databases (for enterprise users), or even partner data sources like media publishers.

- Output Refinement: The LLM uses the retrieved context to generate an answer that is both accurate and well-cited, which is crucial in today's information landscape where trust and verifiability are paramount.

Source: EN.WIKIPEDIA.ORG
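
One way to picture the "well-cited" part of output refinement is a post-generation check that every citation marker in an answer points at a source that was actually retrieved. The sketch below assumes answers cite sources with bracketed numbers like `[1]`; that format, the function name, and the example URLs are illustrative assumptions rather than a description of Perplexity's internals.

```python
import re

def check_citations(answer: str, sources: list) -> tuple:
    """Split the [n] markers in an answer into cited sources and invalid numbers.

    Returns (cited, invalid): cited is the list of source strings referenced,
    invalid is the list of marker numbers with no matching source.
    """
    cited, invalid = [], []
    for match in re.findall(r"\[(\d+)\]", answer):
        n = int(match)
        if 1 <= n <= len(sources):
            if sources[n - 1] not in cited:
                cited.append(sources[n - 1])
        elif n not in invalid:
            invalid.append(n)
    return cited, invalid

if __name__ == "__main__":
    sources = ["https://example.com/a", "https://example.com/b"]
    answer = "The first claim is supported [1]. The second one is not [3]."
    cited, invalid = check_citations(answer, sources)
    print("cited:", cited)
    print("invalid markers:", invalid)
```

An answer with invalid markers could be regenerated or have the unsupported sentence dropped before it reaches the user.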

How Do They Decide What Companies to Show to Customers Looking for Solutions?

One of the most intriguing aspects of Perplexity's system is how it "decides" what companies and solutions to display when a customer is looking for a product or service. Although the internal algorithms are proprietary, here are some key factors gleaned from various reports and discussions:

1. User Intent and Context

When a user types a query (say, "best CRM solutions for small businesses"), the system first breaks down the intent behind the question. Perplexity's "focus" feature even allows users to narrow results to specific categories—like academic, social, or business. This means the engine can tailor its response based on whether the user is looking for an in-depth technical analysis or just a quick recommendation.

Source: PERPLEXITY.AI

2. Authority and Trustworthiness

Perplexity places heavy emphasis on sourcing information from reputable and authoritative websites. For business queries, the engine looks at multiple signals:

- Reputation Scores: Companies that are frequently cited by trustworthy sources (think major publications or industry experts) tend to rank higher.

- User-Generated Feedback: Discussions on platforms like Reddit and reviews also play a role. If a company is widely recommended by real users, the system is more likely to include it.

- Partnerships and Revenue-Sharing Deals: In some cases, companies have formal agreements with Perplexity (or its partner networks) which might influence the ordering or inclusion of their solutions. For example, integrations with media partners help Perplexity incorporate additional context about companies and their products.

Source: NOGOOD.IO

3. Relevance and Freshness

Because Perplexity uses RAG, it continuously updates its responses based on the latest available information. This ensures that if a company has recently launched a groundbreaking solution, it has a better chance of appearing in the results. The retrieval process also takes into account the recency of the data and its alignment with the query's context.
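
A common way to fold recency into a retrieval score is exponential decay: a document's relevance is multiplied by a factor that halves every fixed number of days. Whether Perplexity uses this exact formula is unknown; the function name and the 30-day half-life below are assumptions chosen purely to illustrate the idea.

```python
def freshness_score(relevance: float, age_days: float, half_life_days: float = 30.0) -> float:
    """Discount a relevance score so that it halves every half_life_days."""
    if age_days < 0 or half_life_days <= 0:
        raise ValueError("age_days must be >= 0 and half_life_days must be > 0")
    return relevance * 0.5 ** (age_days / half_life_days)

if __name__ == "__main__":
    # Same relevance, different ages: the newer page ends up with the higher score.
    print(freshness_score(0.8, age_days=0))
    print(freshness_score(0.8, age_days=30))
    print(freshness_score(0.8, age_days=90))
```

Under this scheme a slightly less relevant but much newer page can outrank an older one, which matches the behavior described above for recently launched products.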

4. Algorithmic Ranking

While the exact ranking algorithm is a trade secret, it most likely blends classic information retrieval (IR) techniques with modern deep-learning-based ranking. Factors like keyword matching, semantic relevance, and even user engagement metrics are probably part of the equation. This holistic approach allows Perplexity to go beyond mere keyword matching and actually understand the nuances of what a customer is looking for.
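
Since the real algorithm is proprietary, the sketch below only illustrates the general pattern of blending several signals into one ranking score. The three signals mirror the factors named above, but the weights, the candidate names, and the assumption that each signal is already normalized to the range 0 to 1 are all hypothetical.

```python
# Hypothetical weights; a real system would learn these from data.
WEIGHTS = {"keyword": 0.3, "semantic": 0.5, "engagement": 0.2}

def blended_score(signals: dict) -> float:
    """Weighted sum of per-candidate signals, each assumed to lie in [0, 1]."""
    return sum(WEIGHTS[name] * signals.get(name, 0.0) for name in WEIGHTS)

def rank(candidates: list) -> list:
    """Return candidate names ordered from highest to lowest blended score."""
    ordered = sorted(candidates, key=blended_score, reverse=True)
    return [c["name"] for c in ordered]

if __name__ == "__main__":
    candidates = [
        {"name": "Vendor A", "keyword": 0.9, "semantic": 0.2, "engagement": 0.1},
        {"name": "Vendor B", "keyword": 0.4, "semantic": 0.9, "engagement": 0.5},
    ]
    for c in candidates:
        print(c["name"], round(blended_score(c), 2))
    print(rank(candidates))
```

With these made-up weights, a page that matches the query's meaning closely can outrank one that merely repeats its keywords.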

SEO, AEO, and the Future of Answer Engines

As AI-powered search tools continue to evolve, so too must our digital marketing strategies. Marketers are already learning how to optimize their content for platforms like Perplexity. Here are a few takeaways:

- Content Authority: High-quality, authoritative content is key. Since Perplexity values reputable sources, brands should strive to get featured on sites that the engine trusts.

- Conversational Optimization: The shift to "answer engines" means content must be structured in a conversational way. Detailed FAQs, clear headers, and natural language explanations help ensure your brand's information is picked up.

- User Intent Analysis: Understanding the different types of user intent (informational, navigational, transactional, and commercial) can guide you in creating content that ranks well on these new platforms.

Source: NOGOOD.IO

Final Thoughts

Perplexity AI is more than just a search engine—it's a glimpse into the future of how we interact with information. By harnessing advanced language models, RAG, and sophisticated ranking algorithms, the platform offers a streamlined, conversation-like search experience that breaks away from the traditional "list of links" paradigm. Whether you're a tech enthusiast curious about the inner workings of AI or a business looking to optimize your digital presence, understanding Perplexity's operational model, base LLMs, and its unique approach to ranking companies is essential.

I'm honestly amazed at how quickly the landscape is shifting—even though sometimes I wish I could double-check a few facts (hey, no system is perfect!). But one thing's for sure: as these technologies evolve, the way we find solutions online is going to be forever changed.

If you have thoughts or questions about Perplexity AI's inner workings or its impact on search and digital marketing, drop a comment below. Let's keep the conversation as lively and inquisitive as the technology itself!